NIST Releases Second Draft of AI Risk Management Framework

On August 18, the National Institute of Standards and Technology (NIST) released a second draft of its Artificial Intelligence Risk Management Framework (the RMF; this version, the Second Draft) for public comment. The first draft, discussed here, was released in March of this year. As described by NIST, the RMF is intended to be a "living document" that will be "readily updated" to reflect changes in technology and approaches to AI trustworthiness, especially as "stakeholders learn from implementing AI risk management generally and [the RMF] in particular."

At a high level, the RMF is "intended for voluntary use and to improve the ability to incorporate trustworthiness considerations into the design, development, use, and evaluation of AI products, services, and systems." "Trustworthy AI" systems will "help preserve civil liberties and rights, and enhance safety while creating opportunities for innovation and realizing the full potential of this technology." To that end, the RMF encourages organizations to adopt a risk mitigation culture that allocates resources according to the risk level and impact of AI systems while acknowledging that "incidents and failures cannot be eliminated."
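For readers who think in code, the idea of allocating resources according to risk level and impact can be sketched as follows. This is only an illustration and not something the RMF prescribes: the `AISystem` class, the 1-to-5 likelihood and impact scales, the likelihood-times-impact score, and the proportional split of review hours are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    # Hypothetical inventory entry; the RMF does not define these fields.
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (frequent)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def risk_score(self) -> int:
        # Assumed scoring rule: likelihood multiplied by impact.
        return self.likelihood * self.impact


def allocate_hours(systems: list[AISystem], total_hours: float) -> dict[str, float]:
    """Split a review budget across systems in proportion to risk score."""
    total_score = sum(s.risk_score for s in systems)
    if total_score == 0:
        return {s.name: 0.0 for s in systems}
    return {s.name: total_hours * s.risk_score / total_score for s in systems}


# Hypothetical inventory of three systems sharing a 100-hour review budget.
inventory = [
    AISystem("resume screener", likelihood=3, impact=5),
    AISystem("spam filter", likelihood=4, impact=1),
    AISystem("chat assistant", likelihood=1, impact=1),
]
hours = allocate_hours(inventory, total_hours=100)
```

In this sketch every system still receives some attention, however small, which is one way to reflect the acknowledgment that incidents and failures cannot be eliminated.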

One of the most significant changes in the Second Draft is a reformulation of the characteristics of trustworthy AI. The Second Draft moves away from the three-class taxonomy of "technical characteristics," "socio-technical characteristics," and "guiding principles" that was used in the first draft of the RMF. Instead, the Second Draft lists seven elements of trustworthy AI, all of which are interrelated and none of which is elevated above the others. The Second Draft explains that "addressing AI trustworthy characteristics individually will not assure AI system trustworthiness, and tradeoffs are always involved." The seven elements set out in the Second Draft are:

- 1. Valid and Reliable. Validity is described as a combination of accuracy and robustness, with robustness in turn described as the ability of an AI system to maintain its level of performance under a variety of circumstances. Reliability is described as the ability of an AI system to perform as required for a given time interval under given conditions.

- 2. Safe. The Second Draft explains that "AI systems 'should not, under defined conditions, cause physical or psychological harm or lead to a state in which human life, health, property, or the environment is endangered.'"

- 3. Fair – and Bias Is Managed. Fairness is described as concerning equality and equity. NIST also further incorporated the findings of its Special Publication on identifying and mitigating bias in AI (discussed here). As described in that document and reiterated in the Second Draft, "NIST has identified three major categories of AI bias to be considered and managed: systemic, computational, and human, all of which can occur in the absence of prejudice, partiality, or discriminatory intent."

- 4. Secure and Resilient. Resilience is described as "AI systems that can withstand adversarial attacks or, more generally, unexpected changes in their environment or use, or to maintain their functions and structure in the face of internal and external change, and to degrade gracefully when this is necessary." The Second Draft also explains that security includes resilience but also includes protocols that avoid and protect against attacks and that "maintain confidentiality, integrity, and availability through protection mechanisms that prevent unauthorized access."

- 5. Transparent and Accountable. Transparency refers to the extent to which information about an AI system is available to individuals, and it is critical to ensuring fairness and eliminating bias. The Second Draft also explains that "determinations of accountability in the AI context relate to expectations of the responsible party in the event that a risky outcome is realized."

- 6. Explainable and Interpretable. Per the Second Draft, "explainability refers to a representation of the mechanisms underlying an algorithm's operation, whereas interpretability refers to the meaning of AI systems' output in the context of its designed functional purpose," both essential to effective and responsible AI.

- 7. Privacy-Enhanced. The Second Draft posits that "privacy values such as anonymity, confidentiality, and control generally should guide choices for AI system design, development, and deployment." Citing NIST's privacy framework, the goal is to ensure that AI systems safeguard "freedom from intrusion, limiting observation, [and] individuals' agency to consent to disclosure or control of facets of their identities (e.g., body, data, reputation)."
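Because the Second Draft stresses that the characteristics cannot be addressed in isolation, an organization might track all seven together in a single review record. The snippet below is a minimal sketch of that idea; the `unaddressed` helper and the free-text `notes` format are hypothetical and do not come from the RMF, and a complete checklist would not by itself establish that a system is trustworthy.

```python
# The seven characteristics listed in the Second Draft.
CHARACTERISTICS = (
    "valid and reliable",
    "safe",
    "fair, with bias managed",
    "secure and resilient",
    "transparent and accountable",
    "explainable and interpretable",
    "privacy-enhanced",
)


def unaddressed(review_notes: dict[str, str]) -> list[str]:
    """Return the characteristics that have no non-empty note in a review."""
    return [c for c in CHARACTERISTICS if not review_notes.get(c, "").strip()]


# Hypothetical review that looked only at accuracy and privacy.
notes = {
    "valid and reliable": "accuracy benchmarked on held-out data",
    "privacy-enhanced": "training data de-identified",
}
gaps = unaddressed(notes)
```

Here `gaps` would list the five characteristics the hypothetical review skipped, flagging where tradeoffs have not yet been considered.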

The Second Draft maintains the four high-level functions of AI risk management contained in the first draft, i.e., Map, Measure, Manage, and Govern. In the Second Draft, the Govern function is elevated to the first position in the management framework. NIST emphasizes that "Govern is a cross-cutting function that is infused throughout AI risk management and informs the other functions of the process."

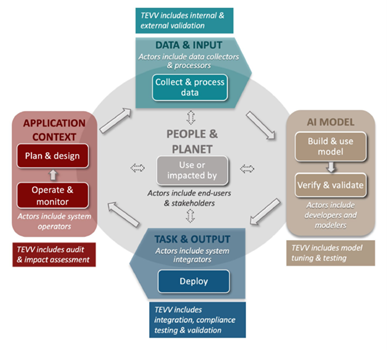

Under the Govern function, responsibility for the various AI risk management functions should be clearly defined and delegated. The Second Draft includes new descriptions in Figure 1 (reproduced below) and Appendix A that explain more clearly who the audience of the RMF is and what roles and responsibilities different individuals and groups can play in the risk management process. "The AI RMF should allow for communication of AI risks across an organization, between organizations, with customers, and to the public at large."

The Second Draft also emphasizes the importance of testing, evaluation, verification, and validation, or "TEVV," throughout the AI design, development, and deployment lifecycle. NIST explains that actors with expertise in TEVV should be integrated at various stages of the risk management process.

Figure 1: Lifecycle and Key Dimensions of an AI System at page 5 of the Second Draft

Alongside the Second Draft, NIST has also published a Playbook that it describes as an online resource intended "to help organizations navigate the AI RMF and achieve the outcomes through suggested tactical actions they can apply within their own contexts." NIST is also soliciting comments on the Playbook and explains that Playbook users can contribute their suggestions for inclusion to the broader Playbook community. Like the RMF, the Playbook "is intended to be an evolving resource, and interested parties can submit feedback and suggested additions for adjudication on a rolling basis."

Conclusions

NIST will accept comments on the Second Draft until September 29, 2022, will hold a workshop on October 18-19, 2022, and then expects to publish the final version of the RMF and Playbook in January 2023. NIST has encouraged a variety of stakeholders to submit comments on the drafts of the RMF. Although NIST states explicitly that the RMF is not a mandatory regulatory framework, it is likely to significantly influence industry standards, much like NIST's influential cybersecurity guidelines. As such, organizations that develop or use AI systems should consider engaging with NIST, including by submitting comments, as the RMF is developed.