Understanding "Trustworthy" AI: NIST Proposes Model to Measure and Enhance User Trust in AI Systems

Advances in artificial intelligence (AI) come with the promise of life-changing improvements in technologies such as smart buildings, autonomous vehicles, automated health diagnostics, and autonomous cybersecurity systems. But AI developments are not without challenges—and balancing the promise of technological advances with the importance of user trust is key to successful AI-enabled product deployment and uptake.

As we use AI to perform a myriad of tasks, the technology "learns" by ingesting additional data and then updating its decisions. Given the "complexity and unpredictability" of AI interactions with users, developing and enhancing user trust in this technology is critical to long-term adoption of AI systems and applications. To address this challenge, the National Institute of Standards and Technology (NIST) is continuing its work on AI issues and joining other efforts focused on evaluating and enhancing the trustworthiness of AI systems.

Following its recent workshop exploring issues of bias in AI, NIST has announced an initiative to evaluate user trust in AI systems, set out in its draft NIST Interagency Report (NISTIR 8332), Trust and Artificial Intelligence. The draft report aims to stimulate discussion about human trust in AI systems and to investigate how to foster public trust in AI.

Emphasizing the importance of user trust, the draft report proposes a model for evaluating the factors behind an individual's trust in an AI system, trust that necessarily depends on the "decision" the AI system is making for the user.

Distinguishing the Broader NIST Effort

Notably, the draft report is one piece of a larger body of work by NIST on AI technical standards and studies to advance trustworthy AI systems. But unlike the broader NIST effort, the draft report, as characterized in the press release, centers on understanding how users experience trust when using AI systems or when affected by such systems: "The report contributes to the broader NIST effort to help advance trustworthy AI systems. The focus of this latest publication is to understand how humans experience trust as they use or are affected by AI systems."

Importantly, user trust differs from the technical requirements of trustworthy AI. The latter concept is analyzed in other efforts, such as the European Commission Assessment List for Trustworthy AI and the proposed European Union Regulation on Fostering a European Approach to Artificial Intelligence. Operational or technical trust concerns whether "AI systems are accurate and reliable, safe and secure, explainable, and free from bias."

User trust is focused on "how the user thinks and feels about the system and perceives the risks involved in using it." Evaluating user trust reflects the fact that a person may weigh the technical factors differently "depending on both the task itself and the risk involved in trusting the AI's decision."

User Trust in AI Systems Presents Unique Challenges

Understanding user trust in AI is challenging, in part because consumer adoption of new technologies often lags behind consumer excitement about them. Even when told that an AI system has been validated as trustworthy, a user may still conclude that it is not.

The nature of AI systems adds further complexity, setting AI-enabled products and services apart from other advanced technologies when it comes to user trust. Specifically, AI-enabled products and services use machine learning and other methods to discover patterns in data, make recommendations, or take actions in ways that are not always easily understood, predicted, or explained to consumers.

Modeling Human Calculus: Nine Factors for User Trust

NIST's report raises the fundamental question of whether human trust in AI systems is measurable and, if so, how to measure it accurately and appropriately. To evaluate trustworthiness, the draft report proposes using nine factors, or characteristics, that contribute to a person's trust in AI.

The draft report does not define the nine factors beyond the model's formulas. The factors were introduced in the 2019 NIST report U.S. Leadership in AI (and in NISTIR 8074 Volume 2), which describe them as follows.

- Accuracy: Accuracy is an indicator of an AI system's performance. Perceived accuracy will depend on the quality of an AI system for a particular purpose.

- Reliability: Reliability is the ability of a system to perform its required functions under stated conditions for a specified period of time. The NIST publications cite ISO/IEC 27040:2015 for this definition.

- Resiliency: Resiliency can be defined as the adaptive capability of an AI system in a complex and changing environment. The term may also refer to the ability to reduce the magnitude or duration of disruptions to an AI system.

- Objectivity: Objectivity can refer to an AI system that uses data that has not been compromised through falsification or corruption.

- Security: Security most often refers to information security. Information security involves the use of physical and logical security methods to protect information from unauthorized access, use, disclosure, disruption, modification, or destruction.

- Explainability: Explainability refers to the extent to which humans can understand an AI system's results. Explainable AI contrasts with the "black box" concept in machine learning, under which it is impossible to explain why an AI system made a decision.

- Safety: Safety is the condition of the system operating without causing unacceptable risk of physical injury or damage to the health of people. The NIST publications above take this definition from ISO/IEC Guide 55:1999.

- Accountability: Accountability involves traceability, or providing a record of events related to the specific use case. Accountability tools can help document gaps between the planned and achieved outcomes of AI systems.

- Privacy: Privacy will differ by use case and depends on the type of data at issue and other considerations like the societal context for the use case. Privacy considerations involve collection, processing, sharing, storage, and disposal of personal information.

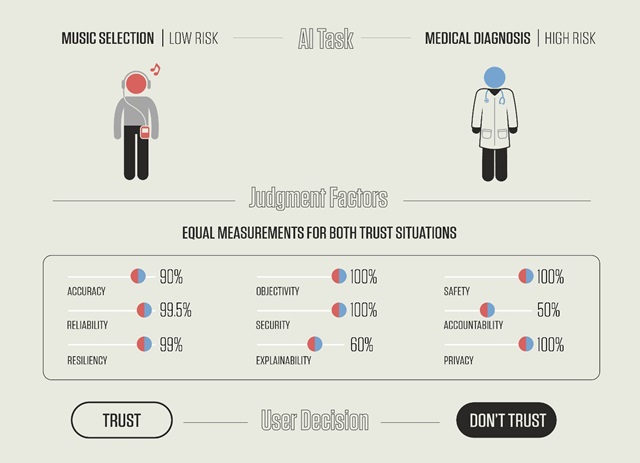

The weight an individual places on the combination of these nine factors may change, depending on how the individual is using the AI system and the level of risk involved in that particular use. For example, user trust in a low-risk application, such as a music selection algorithm, may be tied to certain factors that differ from those factors influencing user trust in a high-risk application, such as a medical diagnostic system.

NIST offers the following graphic to illustrate how a person may be willing to trust a music selection algorithm but not the AI "medical assistant" used to diagnose cancer:

Credit: N. Hanacek/NIST.
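This article does not reproduce the draft report's equations, so the sketch below is not NIST's model. It assumes, purely for illustration, that a user's perceived trustworthiness can be approximated as a weighted average of 0-to-1 ratings on the nine factors, with weights that shift with the risk of the task. The function name `perceived_trustworthiness`, the ratings, and the weights are all hypothetical.

```python
# Hypothetical illustration only: NOT the formula in NISTIR 8332.
# Assumes trust is a weighted average of per-factor ratings in [0, 1].

# The nine trust factors described in the article.
FACTORS = (
    "accuracy", "reliability", "resiliency", "objectivity", "security",
    "explainability", "safety", "accountability", "privacy",
)


def perceived_trustworthiness(ratings: dict, weights: dict) -> float:
    """Return the weighted average of factor ratings (hypothetical model)."""
    missing = [f for f in FACTORS if f not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    unknown = [f for f in weights if f not in FACTORS]
    if unknown:
        raise ValueError(f"unknown factors in weights: {unknown}")
    # Factors the user does not care about in this context get weight 0.
    total_weight = sum(weights.get(f, 0.0) for f in FACTORS)
    if total_weight <= 0:
        raise ValueError("at least one factor needs a positive weight")
    weighted = sum(weights.get(f, 0.0) * ratings[f] for f in FACTORS)
    return weighted / total_weight


# One largely "black box" system: rated well on everything but explainability.
system = {f: 0.8 for f in FACTORS}
system["explainability"] = 0.2

# Hypothetical weight profiles for a low-risk and a high-risk use.
music = {"accuracy": 3.0, "privacy": 1.0}
medical = {"accuracy": 3.0, "safety": 3.0, "explainability": 3.0,
           "accountability": 1.0}

print(round(perceived_trustworthiness(system, music), 2))    # 0.8
print(round(perceived_trustworthiness(system, medical), 2))  # 0.62
```

Holding the ratings fixed and changing only the weights lowers the score from 0.8 to 0.62 once explainability and safety matter, which mirrors the article's point that the same person may weigh the factors differently as the stakes rise.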

Using the model equation as a starting point, the draft report calls for further research into measurement precision for user trust. To this end, the draft report includes a review of the previous research on which the model is based.

Psychologist Brian Stanton, who co-authored the draft report with NIST computer scientist Theodore ("Ted") Jensen, stressed: "We are proposing a model for AI user trust. It is based on others' research and the fundamental principles of cognition. For that reason, we would like feedback about work the scientific community might pursue to provide experimental validation of these ideas."

Participation Needed

As a practical matter, NIST routinely seeks industry participation when developing its frameworks and guidance. For example, NIST relied on significant industry feedback to develop the NIST Cybersecurity Framework, which numerous organizations now follow voluntarily.

Similarly, in the context of AI, industry participation benefits both sides: NIST can incorporate that input into final recommendations that help build consumer trust in the technology. The draft report is open for comment until July 30, 2021.

Please contact any of the attorneys identified herein if you have questions or would like to comment on the draft report.

This article was originally featured as an artificial intelligence advisory on DWT.com on June 17, 2021. Our editors have chosen to feature this article here for its coinciding subject matter.